ACM Multimedia Asia 2023 Grand Challenge Proposal: PAIR-LITEON Competition

Challenge Title

PAIR-LITEON Competition: Embedded AI Object Detection Model Design Contest on Fish-eye Around-view Cameras

Registration URL: https://aidea-web.tw/acmmmasia2023_pair_liteon

Competition Start Date: 06/15/2023

Challenge Description

Object detection has been studied extensively in computer vision and has made tremendous progress in recent years thanks to deep learning methods. However, because most deep learning-based algorithms require heavy computation, it is hard to run these models on embedded systems, which have limited computing capabilities. In addition, the existing open datasets for object detection in ADAS applications with the 3-D AVM scene usually contain pedestrians, vehicles, cyclists, and motorcycle riders in Western countries. These scenes differ from the crowded roads of Asian countries such as Taiwan, where many motorcycle riders speed along city roads, so object detection models trained on the existing open datasets cannot be applied to detecting moving objects in Asian countries such as Taiwan.

In this competition, we encourage participants to design object detection models that can be applied to Asian traffic, where many fast-moving motorcycles share city roads with vehicles and pedestrians. The developed models should not only fit embedded systems but also achieve high accuracy.

This competition is divided into two stages: qualification and final competition.

- Qualification competition: all participants submit their answers online, and a score is calculated for each submission. We will announce the threshold at the beginning of the qualification round. Participants whose accuracy exceeds the threshold during the qualification round qualify for the final round.

- Final competition: the final score will be evaluated on the MemryX CIM computing platform [4].

The goal is to design a lightweight deep learning model suitable for constrained embedded systems that can handle traffic in Asian countries such as Taiwan. We focus on detection accuracy, model size, computational complexity, performance optimization, and deployment on the MemryX CIM SDK platform.

MemryX [4] uses a proprietary, configurable native dataflow architecture together with at-memory computing for Edge AI processing. The system architecture is designed to eliminate the data movement bottleneck while supporting future generations (new hardware, new processes/chemistries, and new AI models), all with the same software.

Given the test image dataset, participants are asked to detect objects belonging to the following four classes {pedestrian, vehicle, scooter, bicycle} in each image and to report the class, bounding box, and confidence of each detection.

Dataset/APIs/Library

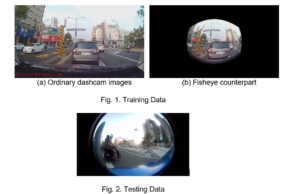

- We provide 89,002 annotated 1920×1080 training images, which are captured from ordinary dashcams mounted on driving vehicles, as well as fisheye-deformed versions of these images generated according to real fisheye parameters. The dataset is called iVS-Dataset; samples of both the ordinary and the fisheye images are shown in Fig. 1. The training images and labeled ground truth are packed into <ivslab_train.tar>, and the ground truth format is similar to the PASCAL VOC format. We will provide a conversion program that transforms the normal images into fisheye images, and we encourage participants to explore other methods of generating fisheye images. The objects in the images are categorized into four classes: pedestrian, vehicle, motorbike (both the motorbike and its rider are included in the bounding box), and bike (both the bike and its rider are included in the bounding box).

There are three subfolders.

- JPEGImages: This folder contains all the training images.

- Annotations: This folder contains the image annotations in XML files, which can be parsed using the PASCAL Development Toolkit.

- ImageSets: This folder contains the text file specifying the list of images for the main classification/detection tasks.

- Each image has a direct correspondence with the annotation file. For example, the bounding boxes for n02123394/n02123394_28.JPEG are listed in n02123394_28.xml.

- Public testing dataset

- 1,500 test images of size 1280×960 are packed into <ivslab_test_public.tar> with the same directory structure as the training dataset, except that there is no Annotations folder. These images are captured from a fisheye camera mounted on driving vehicles. Sample test data is shown in Fig. 2.

- The public test dataset will be released on 2023/07/01. Participants can submit their results online to obtain scores and see their rank among all teams.

- Private testing dataset for the final competition

- Only 5% of the 5,000 1280×960 test images are packed into <ivslab_test_private_final_example.tar>, with the same directory structure as the training dataset except for Annotations. These images are captured from a fisheye camera mounted on driving vehicles. After the final rankings are announced, we will provide the complete private testing dataset.
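Because the ground truth is described as similar to the PASCAL VOC format, an annotation file can probably be read with the Python standard library alone. The sketch below assumes the standard VOC tags (`object`, `name`, `bndbox`, `xmin`, `ymin`, `xmax`, `ymax`); the actual iVS-Dataset files may use different tags or class names, and the sample XML is made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the standard PASCAL VOC layout.
# The real iVS-Dataset files may differ in tags or class names.
SAMPLE_XML = """
<annotation>
  <filename>example.jpg</filename>
  <size><width>1920</width><height>1080</height><depth>3</depth></size>
  <object>
    <name>pedestrian</name>
    <bndbox><xmin>100</xmin><ymin>200</ymin><xmax>150</xmax><ymax>320</ymax></bndbox>
  </object>
  <object>
    <name>vehicle</name>
    <bndbox><xmin>400</xmin><ymin>300</ymin><xmax>900</xmax><ymax>700</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc(xml_text):
    """Return a list of (class_name, xmin, ymin, xmax, ymax) tuples."""
    root = ET.fromstring(xml_text.strip())
    boxes = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        bndbox = obj.find("bndbox")
        # Coordinates are read via float() so that values like "100.0" also parse.
        coords = [int(float(bndbox.findtext(tag)))
                  for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((name, *coords))
    return boxes


boxes = parse_voc(SAMPLE_XML)
for name, xmin, ymin, xmax, ymax in boxes:
    # Width and height are what the submission format asks for (X, Y, W, H).
    print(name, xmin, ymin, xmax - xmin, ymax - ymin)
```

For a file on disk, `ET.parse(path).getroot()` can replace `ET.fromstring`.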

Evaluation Criteria and Methodology

- Qualification Competition

The grading rule is based on the MSCOCO object detection rule [3].

- The mean Average Precision (mAP) [1] is used to evaluate the result.

- The intersection over union (IoU) [2] threshold ranges from 0.5 to 0.95.

The resulting average precision (AP) of each class will be calculated and the mean AP (mAP) over all classes is evaluated as the key metric.
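To make the IoU criterion concrete, here is a minimal sketch of IoU for boxes in the (x, y, w, h) layout used by the submission file. It assumes the MSCOCO convention of ten thresholds from 0.50 to 0.95 in steps of 0.05; the organizers' evaluation code is authoritative, and the function names are illustrative only.

```python
def iou_xywh(box_a, box_b):
    """IoU of two boxes given as (x, y, w, h), with (x, y) the top-left corner."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlap region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


# Assumed COCO-style thresholds: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred, gt):
    """Thresholds at which `pred` would count as a match for `gt`."""
    value = iou_xywh(pred, gt)
    return [t for t in IOU_THRESHOLDS if value >= t]


pred_box = (0, 0, 10, 10)   # hypothetical prediction
gt_box = (0, 0, 10, 8)      # hypothetical ground truth
print(iou_xywh(pred_box, gt_box))
print(matched_thresholds(pred_box, gt_box))
```

A full AP computation additionally sorts detections by confidence and integrates the precision-recall curve per class; the COCO API [3] implements that part.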

- Final Competition

- Mandatory Criteria

- The accuracy of the final submission must not be 5% or more below the accuracy of the model the team submitted in the qualification round.

- The sum of the preprocessing and postprocessing time of the final submission must not be 50% or more slower than the inference time of the main model (evaluated on the host machine).

- [Host] Accuracy (customized mAP, i.e. weighted AP according to Vehicle AP: 10%, Scooter AP: 30%, Pedestrian AP: 30%, Bicycle AP: 30%)–50%. The team with the highest accuracy will receive the full score (50%), and the teams that fall below one standard deviation of the accuracy will receive a score of zero. The remaining teams will be awarded scores proportionate to the difference in mAP.

- [Host] Model size (number of parameters * bit width used in storing the parameters)–20%. The team with the smallest model will get the full score (20%), and the teams that exceed one standard deviation of the model size will receive a score of zero. The remaining teams will get scores directly proportional to the model size difference.

- [Host] Model computational complexity (GOPs/frame)–15%. The team with the smallest GOP number per frame will get the full score (15%), and the teams that exceed one standard deviation of the model computational complexity will receive a score of zero. The remaining teams will get scores directly proportional to the GOP value difference.

- [Device] Speed on MemryX CIM SDK platform–15%. The team whose single model (without preprocessing and postprocessing) completes the detection task in the shortest time will get the full score (15%), and the teams that exceed one standard deviation of the speed will receive a score of zero. The remaining teams will get scores directly proportional to the execution time difference.
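The customized mAP in the accuracy criterion is a weighted sum of the per-class APs. A minimal sketch using the stated weights follows; the per-class AP values are made up for illustration, and the way the organizers normalize scores across teams is not reproduced here.

```python
# Class weights as stated in the accuracy criterion.
AP_WEIGHTS = {
    "vehicle": 0.10,
    "scooter": 0.30,
    "pedestrian": 0.30,
    "bicycle": 0.30,
}


def weighted_map(ap_per_class):
    """Weighted AP over the four classes; every class must be present."""
    missing = set(AP_WEIGHTS) - set(ap_per_class)
    if missing:
        raise ValueError(f"missing AP for classes: {sorted(missing)}")
    return sum(AP_WEIGHTS[name] * ap_per_class[name] for name in AP_WEIGHTS)


# Hypothetical per-class AP values, not results from any real model.
example_ap = {"vehicle": 0.60, "scooter": 0.40, "pedestrian": 0.30, "bicycle": 0.20}
score = weighted_map(example_ap)
print(f"customized mAP = {score:.4f}")
```

With these weights, a gain on scooters, pedestrians, or bicycles counts three times as much as the same gain on vehicles.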

The evaluation procedure covers the overall process from reading the private testing dataset in the final to completing the submission.csv file, including parsing the image list, loading images, and any other overhead incurred in running detection over the testing dataset.

Schedule for Competition

| Phase | Competition Schedule | Date |
| --- | --- | --- |
| Before Competition | Competition Dataset Preparation | 2023/03/01~2023/04/30 |
| | Beta Website | 2023/04/01~2023/06/14 |
| | Website On-line | 2023/06/15 |
| During Competition | Qualification Competition Start | 2023/06/15 |
| | Release Public Testing Dataset for Qualification | 2023/07/01 |
| | Qualification Competition End | 2023/08/04 |
| | Final Competition Start | 2023/08/05 |
| | Release Private Testing Example Data for Final | 2023/08/05 |
| | Final Competition End | 2023/09/04 |
| After Competition | Award Announcement | 2023/09/17 |
| | Release Private Testing Data for Final | 2023/09/17 |
| | Award for Special Session | 2023/12/06 |

Deadline for Submission

| Important Dates for Participants | Submission Deadline |
| --- | --- |
| Qualification Competition Submission | 2023/08/04 12:00 PM UTC |
| Final Competition Submission | 2023/09/04 12:00 PM UTC |
| Paper Submission | 2023/10/05 12:00 PM UTC |

Submission Guidelines

- Qualification Competition

Submission File

Upload a CSV file named submission.csv.

Each detected bounding box occupies one row, and all detected bounding boxes from the test dataset must be stored in a single submission.csv. Each row has the following columns.

- image_filename: filename of the test image

- label_id: predicted object class ID (value must be in {1, 2, 3, 4})

- 1: vehicle

- 2: pedestrian

- 3: scooter

- 4: bicycle

- X: left-top x coordinate of the detected bounding box

- Y: left-top y coordinate of the detected bounding box

- W: width of the detected bounding box

- H: height of the detected bounding box

- Confidence: confidence level of the detected bounding box

Please refer to example_submission.csv for an example.
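As an illustration of the row layout described above, the following sketch writes detections with Python's `csv` module. The header row, the exact column names, and the four-decimal confidence format are assumptions; example_submission.csv remains the authoritative reference. The detections are invented for the example.

```python
import csv
import io

# Column order follows the field list above; whether the official file
# includes a header row with these exact names is an assumption.
FIELDS = ["image_filename", "label_id", "X", "Y", "W", "H", "Confidence"]

# Class IDs as listed in the submission guidelines.
LABEL_IDS = {"vehicle": 1, "pedestrian": 2, "scooter": 3, "bicycle": 4}


def write_submission(detections, fileobj, header=True):
    """Write (filename, class_name, x, y, w, h, confidence) tuples as CSV rows."""
    writer = csv.writer(fileobj, lineterminator="\n")
    if header:
        writer.writerow(FIELDS)
    for filename, class_name, x, y, w, h, conf in detections:
        # An unknown class name raises KeyError instead of writing a bad ID.
        writer.writerow([filename, LABEL_IDS[class_name], x, y, w, h, f"{conf:.4f}"])


# Hypothetical detections for two test images.
detections = [
    ("img_0001.jpg", "scooter", 12, 34, 56, 78, 0.91),
    ("img_0001.jpg", "vehicle", 300, 120, 200, 150, 0.85),
    ("img_0002.jpg", "pedestrian", 640, 480, 40, 90, 0.5),
]

buffer = io.StringIO()
write_submission(detections, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To produce the actual file, pass `open("submission.csv", "w", newline="")` in place of the `StringIO` buffer.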

- Final Competition

The finalists have to hand in a package that includes a PyTorch/TensorFlow model and inference script, as well as the converted ONNX model. We will deploy the ONNX model to the MemryX CIM SDK platform and compute the final score by running the model.

A technical report is required to describe the model structure, complexity, execution efficiency, etc. for each category.

Submission File

Upload a zip file named submission.zip containing the following files:

- PyTorch/TensorFlow Inference Package

- An official Docker image will be released by the organizer

- The following files must exist in the submitted inference package

- PyTorch or TensorFlow model

- Converted ONNX model

- py [image_list.txt] [filepath of output submission.csv]: It runs your model to detect objects in the test images listed in image_list.txt and creates submission.csv at [filepath of output submission.csv]. The CSV format is identical to that of the qualification competition. A template of the script will be released and must be followed.

- Source code of your model: The directory structure of source code shall be illustrated in README.

- techreport.pdf

The technical report describes the model structure, complexity, execution efficiency, experiment results, etc.

- cal_model_size_pdf

Explain the details of the model size calculation of the object detection architecture. The size calculation of each layer with parameters shall be included in the report file.

- cal_model_complexity_pdf

Explain the detailed calculation of the model GOPs/frame for your detection model.

- The TF profiler may be used to calculate the main model complexity

- For any other layers and processing, please describe the detailed calculation process.
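As an illustration of the per-layer accounting these two reports ask for, the sketch below counts parameters and operations for a single 2-D convolution layer. It assumes that one multiply-accumulate counts as two operations and ignores activations and other layers; the organizers' counting convention may differ, and the layer dimensions are hypothetical.

```python
def conv2d_params(c_in, c_out, k_h, k_w, bias=True, groups=1):
    """Number of learnable parameters in a 2-D convolution layer."""
    weights = (c_in // groups) * c_out * k_h * k_w
    return weights + (c_out if bias else 0)


def conv2d_macs(c_in, c_out, k_h, k_w, out_h, out_w, groups=1):
    """Multiply-accumulate operations for one forward pass on one frame."""
    return (c_in // groups) * c_out * k_h * k_w * out_h * out_w


def model_size_bits(num_params, bit_width):
    """Model size as defined in the criteria: parameters * bit width."""
    return num_params * bit_width


def gops(macs, ops_per_mac=2):
    """Giga-operations per frame; ops_per_mac=2 is an assumed convention."""
    return macs * ops_per_mac / 1e9


# Hypothetical first layer: 3x3 convolution, 3 -> 16 channels, 320x320 output.
params = conv2d_params(3, 16, 3, 3)
macs = conv2d_macs(3, 16, 3, 3, 320, 320)
print("parameters:", params)
print("size in bits at 8-bit storage:", model_size_bits(params, 8))
print("GOPs/frame:", gops(macs))
```

Summing these quantities over all layers gives the totals for the model; a profiler result can be cross-checked against such a hand calculation.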

Additional Information

- Team mergers are not allowed in this competition.

- Each team can consist of a maximum of 4 team members.

- The task is open to the public. Individuals, institutions of higher education, research institutes, enterprises, or other organizations can all sign up for the task.

- A leaderboard will be set up and made publicly available.

- Multiple submissions are allowed before the deadline, and the last one will be used for the final qualification decision.

- The upload date/time will be used as the tiebreaker.

- Privately sharing code or data outside of teams is not permitted. It’s okay to share code if made available to all participants on the forums.

- Personnel of the IVSLAB team are not allowed to participate in the task.

- A common honor code should be observed. Any violation will result in disqualification.

Awarding Regulation

All award candidates will be invited to submit contest papers to the 2023 ACM Multimedia Asia Workshop for review. The final award winners will be decided and announced based on the paper review results, the scoring results of the final competition, and the completeness of the submitted documents. In addition, all award recipients must attend the ACM Multimedia Asia 2023 Grand Challenge PAIR-LITEON Competition Special Session to present their work; otherwise, the award will be canceled.

Based on the above evaluation, we will select three teams for regular awards and some teams for special awards. Any award may be left vacant, depending on the conclusion of the review committee.

For all AI models winning awards, LITEON has the right of first negotiation with the award-winning teams on the commercialization of their developed models.

Regular Awards

- Champion: USD 1,500

- 1st Runner-up: USD 1,000

- 3rd Place: USD 500

Special Awards

- Best pedestrian detection award (highest AP of pedestrian recognition in the final competition): USD 300

- Best bike detection award (highest AP of bicycle recognition in the final competition): USD 300

- Best motorbike detection award (highest AP of scooter recognition in the final competition): USD 300

Reference

[1] Average Precision (AP): https://en.wikipedia.org/wiki/Evaluation_measures_(information_retrieval)#Average_precision

[2] Intersection over union (IoU): https://en.wikipedia.org/wiki/Jaccard_index

[3] COCO API: https://github.com/cocodataset/cocoapi

[4] MemryX SDK: https://memryx.com/technology/